In South Korea, a nationwide scandal has unnerved the public and sparked protests about the safety of women and girls. A digital sex ring spread through colleges, high schools and even middle schools via the messaging app Telegram, where members shared images of their female peers' faces stitched onto pornographic content. This crosses multiple lines of morality and consent, as women and girls now have to deal with yet another threat to their safety and bodily autonomy: AI. Mind you, South Korea shelled out $1.6 million to fund the Metaverse, which is frankly a waste of a lot of money. The country has been a strong advocate for an AI-filled future even though AI exists less to fix current problems than to create "convenient shortcuts" for the privileged. AI has only exacerbated class issues, hit marginalized groups the hardest, and created even more problems along the way. Sex crimes, misinformation, copyright infringement, art theft, job loss, discriminatory stereotypes, and the neutering of the creative industry are just a handful of the issues that have sprung up with the rise of generative AI.

When FakeApp was introduced to the public in 2018, intense paranoia and concern about how easily deepfakes could be conjured naturally arose. It's tragically inevitable that technology this powerful, which continuously blurs the line between fabrication and reality, will bring sexually exploitative content, uncanny new forms of blackmail and harassment, and widespread misinformation. Just recently, Donald Trump posted an AI-generated photo of Kamala Harris addressing a communist rally on X, and shortly after, Elon Musk joined in by producing an image of Kamala in a "communist uniform". Anti-Republican netizens retaliated by sharing AI-generated images of Elon and Trump in disparaging scenarios. Although the impulse is to fight fire with fire, I can't help but feel that we're becoming too comfortable with generative AI despite the dangers it's already manifesting in the real world. When we're already so numb to using other people's work and likenesses on the internet, we don't seem to recognize how our use of AI borders on theft and manipulation. We may be able to tell that a photo of Kamala as a commie or of Trump and Elon awkwardly kissing isn't real, not because there are flaws in the AI-generated images (let's pretend there are none), but because we have the critical knowledge and enough context to know better. But what about the people who don't?

It may feel obvious to us, but remember that there are people who couldn't be bothered to fact-check whether or not Imane Khelif is a trans woman. Even more concerning, people want to affirm their biases, so when they come across a piece of misinformation that does just that, no matter how over the top, they will gleefully spread the false word. Two of my classmates exposed me to the infamous Australian breakdancer at the 2024 Olympics (I'm not gonna link that one, feel free to look it up), and both had wild stories about how an amateur competitor wound up in such an elite event. One told me she was doing a "social experiment to write a paper on the cultural politics of competitive B-boying" (seemingly extrapolated from the fact that the dancer had apparently studied the subject; no actual source says she was there to write a paper), and the other told me she was a semi-finalist who had beaten other dancers, so her failure must have been intentional. I was bemused by the idea of someone using the Olympic stage for her own personal thought piece, but after doing my own research, I found both claims were unfounded. My peers were frustrated at the idea of someone deliberately losing at the Olympics and embarrassing her country, but as far as we know, she was earnestly competing and was knocked out in the first round without scoring a single point. It goes to show how susceptible we are to misinformation, especially when it stirs strong emotions in us. Generative AI is incredibly popular among conservatives, who rely on misinformation to bolster their following. Especially since conservatives skew toward older demographics who may know less about AI and have been conditioned to reject critical thinking, AI imagery is incredibly scary when it's weaponized to further a political agenda.

Beyond AI in politics, the creative industry has been going down a dark path for the last few years: mass layoffs, dozens of shows cancelled and de-platformed, and an over-saturation of reboots and sequels at the expense of new original content. In 2023, the Writers Guild of America went on strike, with the Screen Actors Guild quickly joining them on the picket line. One of their many demands was to keep AI out of the writers' room and off the screen. The debut of ChatGPT, a chatbot that produces written content, introduced a new rival in the workplace, one that doesn't need workers' rights or a livable wage, and AI has already been used in film to de-age and resurrect bygone actors rather than cast new and budding ones. Many companies were quick to support AI as a replacement for human workers and continue to encourage its development. The quality may drop drastically, it's more prone to error than humans because it can't think for itself, and the lack of human oversight may breed unlawful outcomes, but it's cheap and easy. The National Eating Disorders Association (NEDA) tried its hand at using AI to run its helpline, with swift and dangerous results.

> On Monday, activist Sharon Maxwell posted on Instagram that Tessa offered her "healthy eating tips" and advice on how to lose weight. The chatbot recommended a calorie deficit of 500 to 1,000 calories a day and weekly weighing and measuring to keep track of weight.
>
> "If I had accessed this chatbot when I was in the throes of my eating disorder, I would NOT have gotten help for my ED. If I had not gotten help, I would not still be alive today," Maxwell wrote. "It is beyond time for Neda to step aside."

It should be obvious why using AI instead of a person for a crisis hotline is deeply impersonal and inadequate, even when it functions properly. The human empathy, and the trust that the person on the other end of the line specializes in your concerns, are what make crisis hotlines a reliable source of help. Although AI can sometimes help human workers work faster and more efficiently, leaving AI to handle human concerns on its own is prone to errors, and those are errors that can't be allowed when a human life is at stake.

The entertainment industry is still in a precarious state, and as of now, animators are finally having their turn to strike. Animation has been hit the hardest amid the further commercialization of the industry, as the medium has been continuously belittled and de-prioritized. This has made animation more "disposable" in the eyes of studio execs, and animated projects were scrapped and abandoned after Discovery merged with WarnerMedia to form Warner Bros. Discovery. Animation workers are now fighting back, but it's hard to tell whether their demands will be met when animation is already thought of so little.

This isn't just me peering into the climate of AI as some third-person observer. Like almost all of us in some way, I have had my life intruded upon by AI. I'm an animator myself and have been unemployed for the past two years, since the layoffs started…

Time to get a little personal

At first, I was only hearing about AI art from friends and seeing concerned posts from artists I follow. At the time, I couldn't perceive what kind of threat AI posed, and since generative AI was still rough around the edges, it was hard to fathom how invasive it could really be. My friend at the time had a positive outlook on generative AI: that it would be an accessible way for people who struggled to put their ideas onto paper to manifest them digitally. In a non-capitalist society, it would be lovely for everyone to partake in and enjoy art without worrying about how it could interfere with our income. Sadly, we do not live in that society. Once we learned that generative AI scraped artists' work online and amalgamated it into new pieces without the artists' consent, credit or pay, we quickly saw that AI was a plague on the art community. AI "art" sprouted up like weeds as it became more popular, from AI bros selling stolen and unholy images of big-tittied, baby-faced anime women to scammers stealing scholarships and prize money by entering AI-generated images into art contests. What was (and still is) a mere subject of mockery for AI's wonky renderings and pathetic abuses of art now felt like a tangible danger to artists' livelihoods.

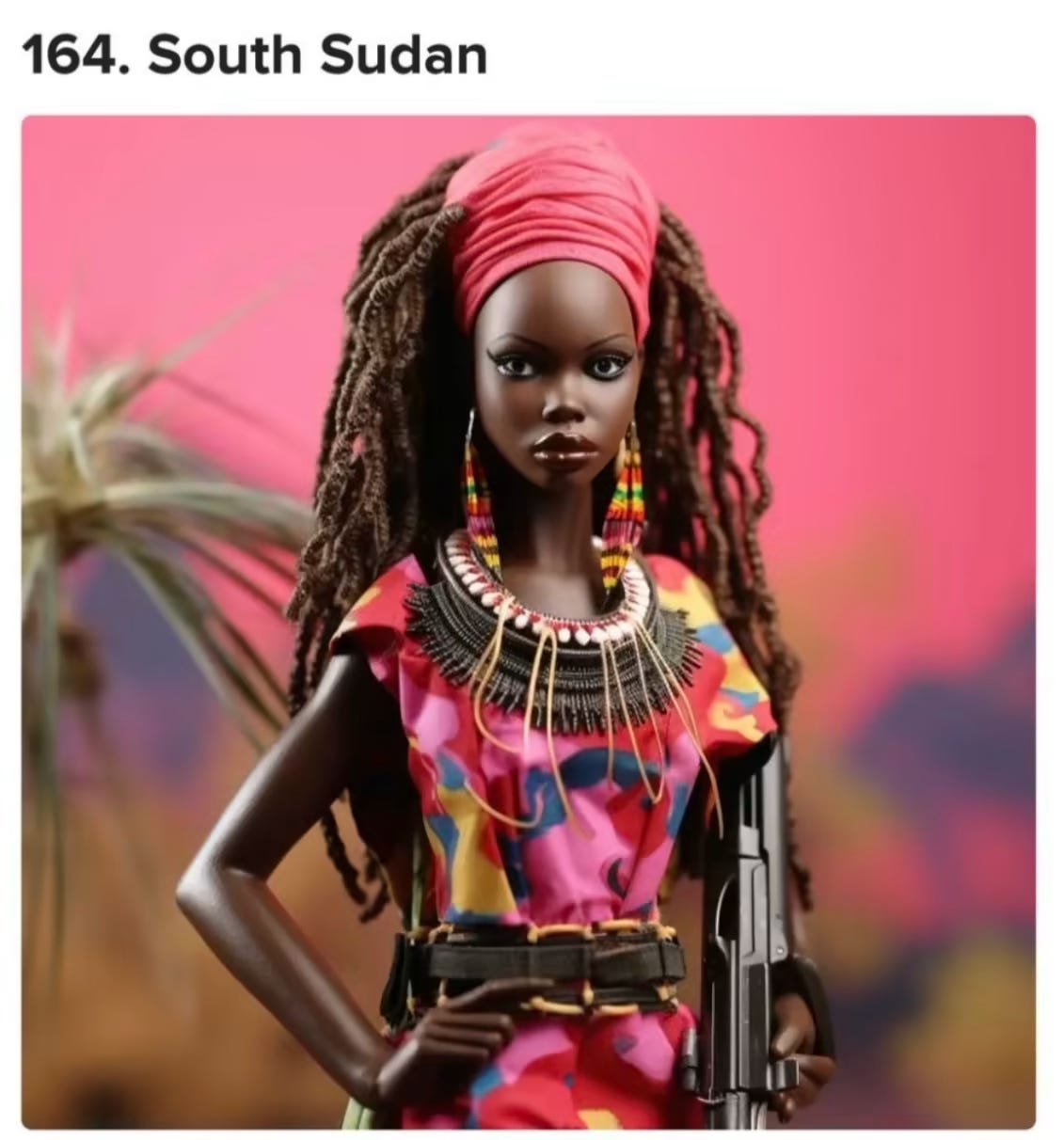

My sister, meanwhile, fact-checks and edits educational books for children. Her boss, a nepotism hire, pitched the idea of using AI images instead of the paid photo library they already have, which supports photographers and allows them to pursue their dream jobs. He rightfully faced pushback from the staff, who argued that it would be incredibly damaging to the company's image to use AI when their whole brand is making educational content. Beyond the fact that fake images would more than likely run into legal issues, since they are built from copyrighted material scraped from the internet, they would also be highly likely to spread misinformation. If someone were shown an AI-generated image of the streets of India and told it was real, it would not only humiliate the company but also risk harmful and offensive misinformation. AI can't identify discriminatory stereotypes, and it has produced deeply concerning and problematic material when portraying anything outside of Whiteness.

Maybe it was just my own algorithm that made me feel anyone with enough shame would avoid publicly using AI, but I came to realize that a lot of people are more than comfortable bolstering the future of AI. When I went back to school to try to muster some productivity back into my life and find a way back into the animation industry (I know, I'm stupid for trying), I found that many of my peers had used, and continued to use, generative AI. I was baffled; I thought animation was one of the last places I'd find AI enthusiasts, yet during a team assignment where we had to model a city, one of the teams used AI to generate their references. The result was flat, and the city visibly lacked the elements that would make it livable or appealing, something the students didn't seem to clock, didn't bother fixing themselves, or didn't know how to fix (the result of relying on AI). This AI-made city was presented to a class of 30-ish creatives and artists who were putting themselves into debt to pursue a thinning career that currently has no job openings. In the same vein, my sister told me how one of her co-workers at the educational book company openly admitted to using ChatGPT for work and has been pushing for AI to be integrated into the workplace.

It completely bewilders me, because beyond the fact that AI is prone to mistakes and crossing legal lines, I don't understand why people pursue careers they can't do themselves. If you're a writer, shouldn't you want to write yourself? If you're an artist, shouldn't you want to create your own art? Even if you're using your skills to produce content for a client, which is admittedly more laborious than fun, shouldn't your integrity as a creative make you want to show off your own talents? I had the privilege of seeing James Baxter, a famous and talented animator who worked on films such as Beauty and the Beast, The Prince of Egypt, Spirit, and The Hunchback of Notre Dame. When asked about his feelings on AI, James talked about the unease he felt watching AI being methodically integrated into studios. He once interacted with an AI technician brought into his workplace who tried to assure him that AI would mean he didn't have to do the "hard stuff" anymore, but James told us that the hard stuff was what he liked about his job. Art has hurdles, and working your way through them is what makes it ultimately satisfying. The payoff of animating or drawing something the way I envisioned it in my head is deeply gratifying. Even with the things I still struggle with, as frustrating as they may be, I want to succeed on my own rather than cop out and use generative AI. Leaning on AI and simply connecting the dots laid out for me is a soulless task that I'm not eager for, and it makes me worry about the appearance and quality of future creative works as AI becomes more widespread.

A friend of mine told me she used AI to generate a color palette for her art because she hasn't figured out color theory. Although I understand the argument that generative AI can be just another tool to aid artists, I thought about how I initially hated coloring my own art. I'm still polishing my skills, but as I learned and practiced color theory, it became a whole new avenue of experimentation and expression that I came to love. Seeing my friend unwilling to go through that experience herself, because it was faster to generate a color palette on the computer, felt like she was missing out on an opportunity and stifling herself as an artist. I feel that as we become more and more dependent on technology, we're losing our problem-solving skills and lowering our own standards for what we create. When technology fills in the holes for us, we settle for a sufficient product rather than an excellent piece that requires more labour. Although I'd argue that it's fine to just meet the brief when working for a paycheck, I fear that we're becoming less innovative in the wake of generative AI.

An AI-saturated future seems imminent in our capitalist system, which jumps at every opportunity to use cheaper and cheaper tools. We already see this in physical products, where our clothing, technology and household supplies have become cheaper, more brittle, and less sustainable. This is a deliberate trade-off of long-lasting, high-quality goods for cheaper, more disposable products, so that we constantly buy more to replace and upgrade our already decaying purchases while manufacturers spend less making them (at the expense of exploited outsourced workers in countries of color). The rapid pace of social media and the spoon-feeding of shallow information have eroded our attention spans and media literacy skills (proven by how many netizens don't actually know what media literacy means and misuse the term to defend their favorite shows by accusing critics of "lacking media literacy"). Concerns about Gen Alpha, who struggle with basic academics and language skills after growing up on short, fast-paced and overstimulating digital content like Cocomelon and predatory influencers like MrBeast, point to a future of technology-addicted, easy-to-profit-from consumers. I know it sounds like a conspiracy, but when our current generation is already so easily persuaded by online trends and digital marketing, I can't help but feel that corporations would be more than willing to foster an impulsive and gullible demographic, the same way they have fostered beauty standards in order to sell us patriarchal beauty products. I can't even run a Google image search anymore without the top results being littered with AI-generated images, so I fear that those much younger than me, who won't have been taught the skills to tell them apart and may grow up in a time when AI blends seamlessly with the real, will be easily taken advantage of by capitalist predators.

So stop using generative AI!

You may be exempting yourself from the problem. You haven't fallen for an AI-generated picture (that you know of), and you've only been using AI to make harmless, self-indulgent things for yourself, like what it would sound like if Sabrina Carpenter covered a NewJeans song or what you'd look like as an anime character. However, every time you feed prompts into an AI generator, you feed unwilling artists and people into a system that exploits them. The more you use generative AI, the better it gets at blending in with the real. Meta has deliberately made it nearly impossible to opt out of feeding its AI, John Green shone a light on how Google and YouTube are using creators' data to train AI, Reddit put its data behind a paywall to sell users' posts to AI companies despite this infringing on users' ownership of their work, and Adobe's terms gave it access to anything you create in its programs for its AI features, which crosses NDA lines. Whether or not you are willing to forfeit your information to feed AI, you must recognize that we deserve transparency and the ability to opt out if we want to. We have had so little say over our personal information proliferating across the internet and being passed around by corporations to advertise curated products to us. It's creepy and dystopian, yet it's our current normal because nothing is stopping them. We just sigh to ourselves as we let a site we only meant to pass through sell our "cookies", and we are quickly met with ads for whatever we just searched. Even as I researched and sourced news articles for this personal vent and essay, I kept thinking, "I wonder how this is going to change my algorithm for the worse."

Although it feels nearly impossible to navigate the web without having to "consent" to giving away your data, you can avoid using generative AI. You simply don't have to. You existed before generative AI, and you functioned just fine without it. If you want to create something you've been turning to AI for, come up with different and more ethical ways to create it! Don't let the allure of easy technology render you helpless; you are capable of coming up with creative solutions to creative problems. AI is currently facing its own hurdles as artists and creatives fight back as much as they can to protect their work and livelihoods. Don't be complicit in the threat of AI; don't contribute to it. Support people, resist AI, and constantly engage your critical skills so you never need to rely on big tech companies.